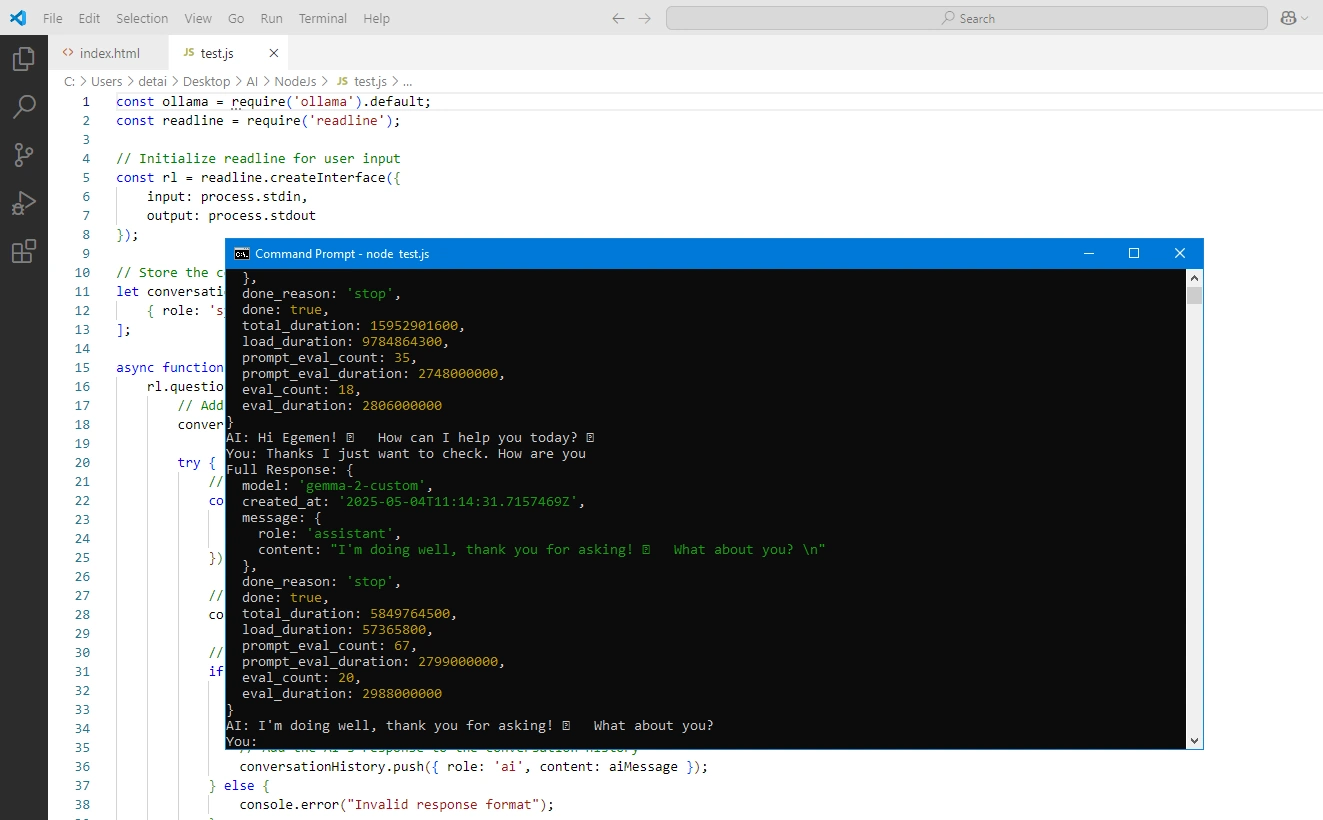

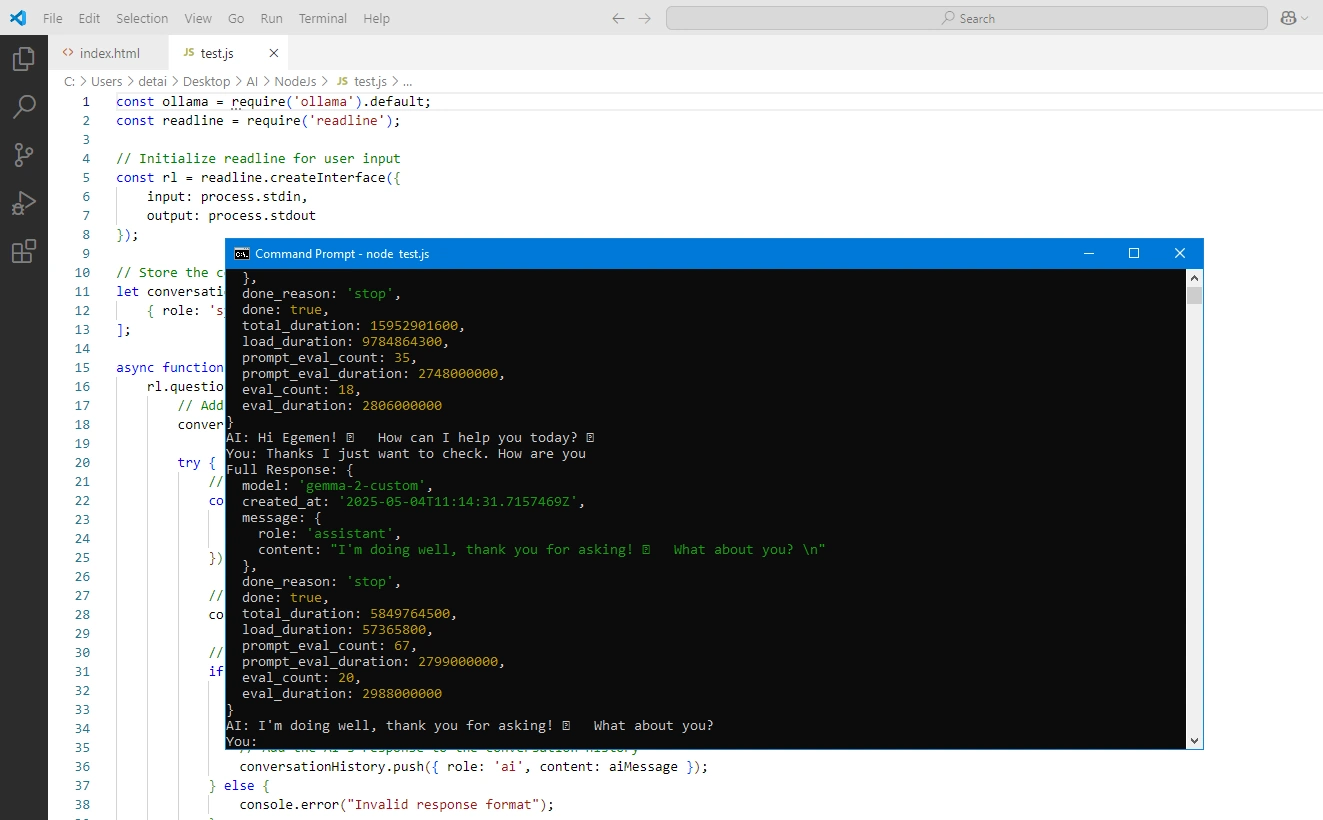

Ollama AI Model Integration

Running and testing open-weight language models locally with Node.js and Ollama

Overview:

This project integrated Ollama, a tool for running large language models on a local machine, with Node.js to run and test Meta's Llama 3.2 and Google's Gemma 2 models. The goal was to explore efficient, offline deployment of AI models.

Challenges:

Need for a reliable local environment to experiment with large language models

Ensuring compatibility with Node.js-based applications

Balancing performance and memory requirements on development machines
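To make the memory trade-off concrete, a common back-of-the-envelope estimate is that a model's weights need roughly (parameter count × bits per weight ÷ 8) bytes. The sketch below applies that rule. The nominal sizes (3B for Llama 3.2, 9B for Gemma 2) and the 16-bit and 4-bit precisions are illustrative assumptions. The estimate also ignores the KV cache and runtime overhead, so real usage will be higher.

```javascript
// Rough estimate of the memory needed just to hold a model's weights.
// Assumption: bytes ≈ parameters × bitsPerWeight / 8. The KV cache,
// context window, and runtime overhead come on top of this figure.
function estimateWeightMemoryGB(paramsBillions, bitsPerWeight) {
  if (paramsBillions <= 0 || bitsPerWeight <= 0) {
    throw new RangeError("parameters and bits per weight must be positive");
  }
  // (params × 1e9) × (bits / 8) bytes, divided by 1e9 bytes per GB
  return (paramsBillions * bitsPerWeight) / 8;
}

// Illustrative comparison at full 16-bit and ~4-bit quantized precision.
const candidates = [
  { name: "llama3.2 (3B)", params: 3 },
  { name: "gemma2 (9B)", params: 9 },
];

for (const { name, params } of candidates) {
  const fp16 = estimateWeightMemoryGB(params, 16);
  const q4 = estimateWeightMemoryGB(params, 4);
  console.log(`${name}: ~${fp16} GB at 16-bit, ~${q4} GB at 4-bit`);
}
```

Running it prints about 6 GB versus 1.5 GB for the 3B model and 18 GB versus 4.5 GB for the 9B model, which illustrates why quantized model builds matter on development machines.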

Solution:

Set up and configured the Ollama environment for local model deployment

Integrated the Llama 3.2 and Gemma 2 models with a custom Node.js backend

Wrote sample prompts and reviewed the models' responses to validate their behavior and performance
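For the setup step, a typical sequence is to install Ollama, pull the models (for example `ollama pull llama3.2` and `ollama pull gemma2`), and confirm the server is listening on its default address, `http://localhost:11434`. The sketch below assumes Node.js 18 or later for the built-in `fetch`. It queries Ollama's `GET /api/tags` endpoint and reports any required model that has not been pulled yet. The model tags and the helper names are illustrative, not taken from the project.

```javascript
// Minimal sketch: confirm the local Ollama server is reachable and that the
// required models have been pulled. Assumes Node.js 18+ (global fetch) and
// Ollama's default address; REQUIRED_MODELS is an illustrative choice.
const OLLAMA_URL = "http://localhost:11434";
const REQUIRED_MODELS = ["llama3.2", "gemma2"];

// Ollama lists untagged pulls as "<name>:latest", so normalize before comparing.
function normalizeModelName(name) {
  return name.includes(":") ? name : `${name}:latest`;
}

// Pure helper: given the JSON body of GET /api/tags, return required models that are missing.
function findMissingModels(tagsBody, required) {
  const installed = new Set(
    (tagsBody.models ?? []).map((m) => normalizeModelName(m.name))
  );
  return required.filter((name) => !installed.has(normalizeModelName(name)));
}

// Rejects with a TypeError if the server is not running (fetch cannot connect).
async function checkOllamaSetup(baseUrl = OLLAMA_URL, required = REQUIRED_MODELS) {
  const res = await fetch(`${baseUrl}/api/tags`);
  if (!res.ok) {
    throw new Error(`Ollama responded with HTTP ${res.status}`);
  }
  const missing = findMissingModels(await res.json(), required);
  if (missing.length > 0) {
    throw new Error(`Missing models: ${missing.join(", ")} (pull each with "ollama pull <model>")`);
  }
  return true;
}
```

Calling `checkOllamaSetup()` once at backend startup surfaces a missing server or model immediately, rather than on the first user request.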
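The Node.js backend is not described in detail, so the following is only a hypothetical sketch of how such a backend might forward prompts to Ollama's `POST /api/chat` endpoint. It uses `node:http` and the global `fetch` (Node.js 18+). The `/ask` route, the `ALLOWED_MODELS` list, and the helper names are assumptions made for illustration.

```javascript
// Hypothetical sketch of a Node.js backend that forwards prompts to a local
// Ollama server. The /ask route and ALLOWED_MODELS are illustrative choices.
const http = require("node:http");

const OLLAMA_CHAT_URL = "http://localhost:11434/api/chat";
const ALLOWED_MODELS = new Set(["llama3.2", "gemma2"]);

// Build the JSON body for Ollama's /api/chat endpoint (non-streaming).
function buildChatRequest(model, prompt, options = {}) {
  if (!ALLOWED_MODELS.has(model)) {
    throw new Error(`Unsupported model: ${model}`);
  }
  if (typeof prompt !== "string" || prompt.trim() === "") {
    throw new Error("Prompt must be a non-empty string");
  }
  return {
    model,
    messages: [{ role: "user", content: prompt }],
    stream: false, // ask for one JSON object instead of NDJSON chunks
    options, // e.g. { temperature: 0.2 }
  };
}

// Send one prompt to Ollama and return the assistant's reply text.
async function askModel(model, prompt, options) {
  const res = await fetch(OLLAMA_CHAT_URL, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildChatRequest(model, prompt, options)),
  });
  if (!res.ok) {
    throw new Error(`Ollama error: HTTP ${res.status}`);
  }
  const data = await res.json();
  return data.message.content;
}

// POST /ask with {"model": "...", "prompt": "..."} responds with {"reply": "..."}.
function createBackend() {
  return http.createServer((req, res) => {
    if (req.method !== "POST" || req.url !== "/ask") {
      res.writeHead(404).end();
      return;
    }
    let raw = "";
    req.on("data", (chunk) => (raw += chunk));
    req.on("end", async () => {
      try {
        const { model, prompt } = JSON.parse(raw);
        const reply = await askModel(model, prompt);
        res.writeHead(200, { "Content-Type": "application/json" });
        res.end(JSON.stringify({ reply }));
      } catch (err) {
        // For brevity, bad input and Ollama failures both map to HTTP 400.
        res.writeHead(400, { "Content-Type": "application/json" });
        res.end(JSON.stringify({ error: err.message }));
      }
    });
  });
}
```

To try it, call `createBackend().listen(3000)` and send a request such as `curl -X POST http://localhost:3000/ask -d '{"model":"llama3.2","prompt":"Hello"}'`. Setting `stream: false` keeps the example simple; a real-time client would more likely request a stream, which Ollama returns as newline-delimited JSON chunks.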
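Validating behavior and performance can be partly automated. The sketch below sends each sample prompt to both models through Ollama's `POST /api/generate` endpoint. For each response it records a crude keyword check and a generation speed computed from the `eval_count` (tokens generated) and `eval_duration` (nanoseconds) fields. The prompts, expected keywords, and pass criterion are hypothetical placeholders, not the project's actual test set.

```javascript
// Hypothetical prompt-validation harness. Ollama's non-streaming
// /api/generate response includes `response` (the text), `eval_count`
// (generated tokens) and `eval_duration` (nanoseconds).
const OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate";

// Placeholder prompts with keywords a correct answer should contain.
const SAMPLE_PROMPTS = [
  { prompt: "What is the capital of France? Answer in one word.", expect: ["paris"] },
  { prompt: "List three primary colors.", expect: ["red", "blue"] },
];

// Generation speed in tokens per second, derived from Ollama's timing fields.
function tokensPerSecond(result) {
  if (!result.eval_duration) return 0;
  return result.eval_count / (result.eval_duration / 1e9);
}

// Crude behavioral check: does the reply mention every expected keyword?
function passesCheck(reply, expect) {
  const text = reply.toLowerCase();
  return expect.every((word) => text.includes(word.toLowerCase()));
}

// Run every sample prompt against every model, sequentially.
async function runSuite(models = ["llama3.2", "gemma2"]) {
  const report = [];
  for (const model of models) {
    for (const { prompt, expect } of SAMPLE_PROMPTS) {
      const res = await fetch(OLLAMA_GENERATE_URL, {
        method: "POST",
        headers: { "Content-Type": "application/json" },
        body: JSON.stringify({ model, prompt, stream: false }),
      });
      if (!res.ok) throw new Error(`${model}: HTTP ${res.status}`);
      const result = await res.json();
      report.push({
        model,
        prompt,
        passed: passesCheck(result.response, expect),
        tokensPerSecond: Number(tokensPerSecond(result).toFixed(1)),
      });
    }
  }
  return report;
}
```

`runSuite().then(console.table)` prints one row per model and prompt. Because `eval_duration` covers only token generation, the first request's model load time (reported separately as `load_duration`) does not distort the speed figure. Keyword matching only catches gross failures; judging answer quality still needs a human review of the responses.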

Results:

Successfully deployed and tested both models in a local, offline setup

Laid the foundation for future integration of AI into real-time applications